(UroToday.com) The 108th Scientific Assembly and Annual Meeting of the Radiological Society of North America (RSNA) held in Chicago, IL was host to a plenary session discussing machine learning in radiation oncology clinical trials and clinical practice. Dr. Ruijiang Li discussed the latest advances in machine learning and imaging analytics for personalized cancer care.

Dr. Li began his presentation by highlighting the goals of machine learning and imaging analytics in this space:

- Cancer detection

- Cancer diagnosis

- Prediction of response

- Prediction of prognosis

He referenced work by Hosny et al. that summarized contemporary artificial intelligence methods in medical imaging, with a focus on the diagnosis of a suspicious object as either benign or malignant:1

- The first method relies on engineered features extracted from regions of interest on the basis of expert knowledge. Examples of these features in cancer characterization include tumour volume, shape, texture, intensity and location. The most robust features are selected and fed into machine learning classifiers.

- The second method uses deep learning and does not require region annotation; localization is usually sufficient. It comprises several layers in which feature extraction, selection and ultimate classification are performed simultaneously during training. The layers learn increasingly higher-level features: earlier layers might learn abstract shapes such as lines and shadows, while deeper layers might learn entire organs or objects. Both methods fall under radiomics, the data-centric, radiology-based research field.
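As a rough illustration of the first (engineered-feature) approach, the sketch below computes a few hand-crafted descriptors over a region of interest. It is a minimal example under stated assumptions, not the pipeline described by Hosny et al.: the function name `engineered_features`, the nested-list image layout, the voxel size and the toy values are all hypothetical.

```python
from statistics import mean


def engineered_features(image, mask, voxel_volume_mm3=1.0):
    """Compute a few hand-crafted features over voxels where mask is 1.

    image and mask are equally shaped nested lists indexed [z][y][x].
    All names and the data layout are illustrative assumptions.
    """
    coords, values = [], []
    for z, plane in enumerate(mask):
        for y, row in enumerate(plane):
            for x, inside in enumerate(row):
                if inside:
                    coords.append((z, y, x))
                    values.append(image[z][y][x])
    if not values:
        raise ValueError("mask contains no voxels")
    return {
        # Size: number of voxels in the region times the volume of one voxel.
        "volume_mm3": len(values) * voxel_volume_mm3,
        # Intensity: simple first-order statistics of the voxel values.
        "mean_intensity": mean(values),
        "intensity_range": max(values) - min(values),
        # Location: centre of mass of the region in voxel coordinates.
        "centroid_zyx": tuple(mean(c[i] for c in coords) for i in range(3)),
    }


# Toy 2x2x2 "scan" and a region of interest covering the x == 1 column.
image = [[[10, 20], [30, 40]], [[50, 60], [70, 80]]]
mask = [[[0, 1], [0, 1]], [[0, 1], [0, 1]]]
features = engineered_features(image, mask, voxel_volume_mm3=2.0)
print(features)
```

In practice, features such as these would be computed for every patient, filtered for robustness, and then passed to a machine learning classifier, as the first method describes.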

But how do we ensure that such AI methods get adopted in clinical practice? The emphasis must be three-fold:

- Technical validity: Imaging protocols must be robust

- Clinical validity: Generalizable to different patient populations

- Clinical utility

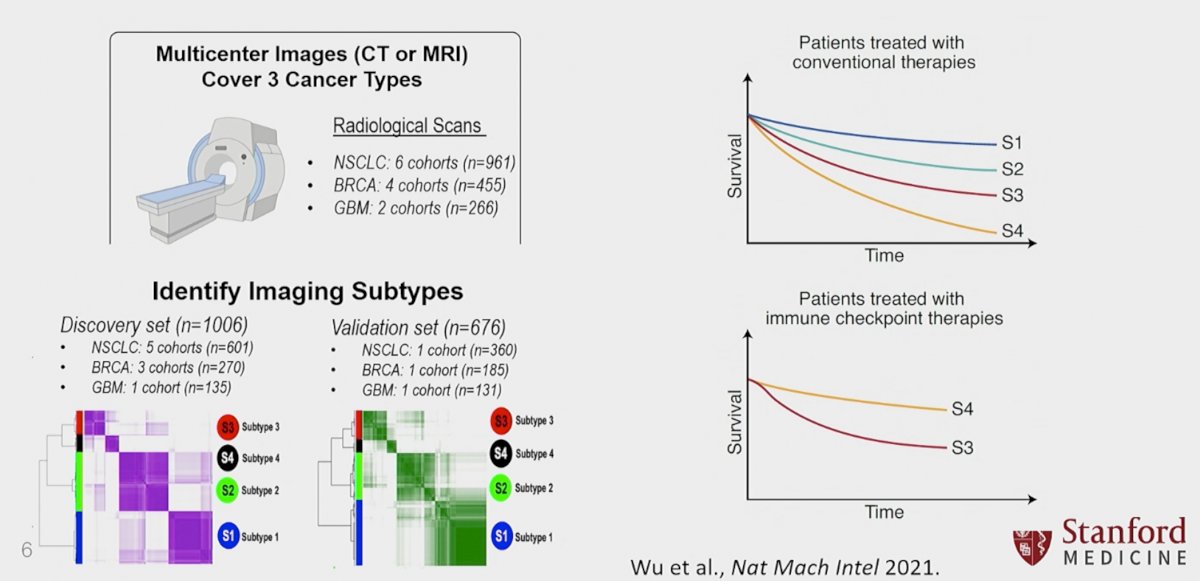

The field of radiomics, which refers to the high-throughput extraction of quantitative features from radiological scans, is being increasingly adopted to search for imaging biomarkers for the prediction of various clinical outcomes. However, current radiomic signatures suffer from limited reproducibility and generalizability, as most features are dependent on imaging modality and tumour histology, making them sensitive to variations in scan protocols. To address this, Wu et al. developed and validated four imaging/radiomics-based models that allow for the use of imaging as both a prognostic and predictive biomarker in patients with lung cancer, across various pathologic subtypes and treatment modalities.

Another study by Wu et al. demonstrated that radiomics, via imaging-based machine learning, can predict tumor subtypes with distinct responses and outcomes. The authors used axial imaging in the form of CT or MRI to evaluate three types of malignancies (non-small cell lung cancer, breast cancer, glioblastoma multiforme). The authors split the cohort into discovery (n=1,006) and validation (n=676) sets. Using imaging findings as demonstrated below, the authors were able to accurately predict outcomes in patients treated with both conventional and immune checkpoint therapies.

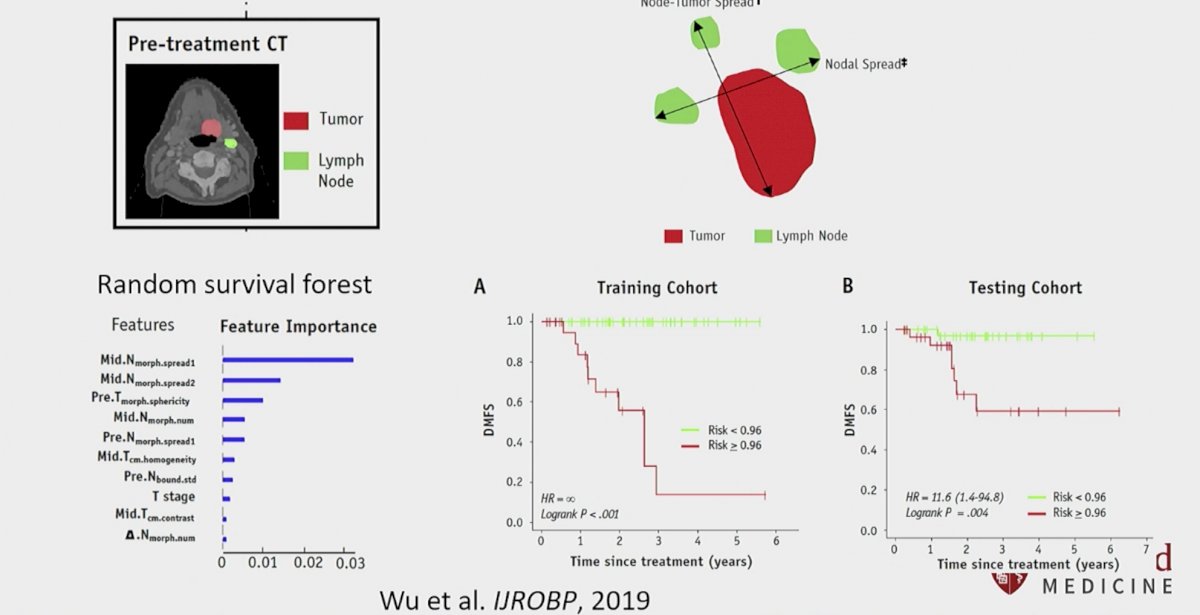

Another interesting application of radiomics in the field of oncology was demonstrated in patients with head and neck malignancies. By using the measured distance between the tumor and enlarged lymph nodes, along with their morphologic characteristics, the authors were able to prognosticate distant relapse rates in patients with oropharyngeal cancer. In this retrospective, single-institution study, the authors included 140 patients with oropharyngeal cancer treated with definitive concurrent chemoradiotherapy, for whom both pre- and mid-treatment contrast-enhanced computed tomography scans were available. Patients were divided into separate training and testing cohorts. Forty-five quantitative imaging features were extracted to characterize the tumor and involved lymph nodes at both time points. By incorporating both imaging and clinicopathological features, a random survival forest model was built to predict distant metastasis–free survival. The authors demonstrated that the maximum distance among nodes, the maximum distance between tumor and nodes at mid-treatment, and pretreatment tumor sphericity significantly predicted distant metastasis–free survival with a good combined discriminatory ability (c-index=0.73, p=0.008). Using this model, the authors were able to identify two cohorts of patients with distinct two-year distant metastasis–free survival rates.2

Results from this analysis have served as the basis for a current study evaluating prognostic imaging signatures in head and neck cancers. A radiomics-based prognostic model using a multi-institutional cohort of 1,771 patients from Stanford, MD Anderson Cancer Center, and the Princess Margaret Cancer Centre is currently being trained, fine-tuned, and internally validated for prediction of oncologic outcomes in patients with head and neck malignancies.
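The three geometric predictors named in the oropharyngeal study (maximum distance among nodes, maximum tumor-to-node distance, and tumor sphericity) can be sketched as follows. This is only an illustration: it assumes each lesion is summarized by a centroid in millimetres and that sphericity uses the standard volume-to-surface-area definition, neither of which is confirmed by the source, and all function names and coordinates are hypothetical.

```python
import math
from itertools import combinations


def max_pairwise_distance(points):
    """Largest Euclidean distance between any two points (0.0 if fewer than two)."""
    return max((math.dist(a, b) for a, b in combinations(points, 2)), default=0.0)


def max_tumor_to_node_distance(tumor, nodes):
    """Largest Euclidean distance from the tumor centroid to any node centroid."""
    return max((math.dist(tumor, node) for node in nodes), default=0.0)


def sphericity(volume, surface_area):
    """Surface area of a sphere with the same volume, divided by the actual
    surface area. Equals 1.0 for a perfect sphere and is smaller otherwise."""
    return (math.pi ** (1 / 3)) * (6 * volume) ** (2 / 3) / surface_area


# Hypothetical centroids in mm (not data from the study).
tumor_centroid = (0.0, 0.0, 0.0)
node_centroids = [(3.0, 4.0, 0.0), (0.0, 0.0, 5.0), (3.0, 4.0, 12.0)]

node_spread = max_pairwise_distance(node_centroids)
tumor_node_reach = max_tumor_to_node_distance(tumor_centroid, node_centroids)

# Sanity check on a perfect sphere of radius 10 mm.
radius = 10.0
sphere_value = sphericity(4 / 3 * math.pi * radius**3, 4 * math.pi * radius**2)

print(node_spread, tumor_node_reach, round(sphere_value, 6))
```

Features of this kind would then be combined with clinicopathological variables as inputs to a survival model such as the random survival forest described above.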

The utility of radiomics has also recently been evaluated for cancer screening purposes, particularly in breast cancer. While screening mammograms identify breast cancer at earlier disease stages, their interpretation is affected by high rates of false positives and false negatives. As such, AI models that can outperform human radiology experts are needed. McKinney et al. utilized large representative datasets from the UK and the USA; the use of an international dataset enhanced the external validity of the model. They demonstrated absolute reductions in false positives of 5.7% (USA) and 1.2% (UK), and in false negatives of 9.4% (USA) and 2.7% (UK). This AI model outperformed all of the human readers: the area under the receiver operating characteristic curve (AUC-ROC) for the AI system was greater than that of the average radiologist by an absolute margin of 11.5%. They next ran a simulation in which the AI system participated in the double-reading process that is used in the UK, and found that the AI system maintained non-inferior performance and reduced the workload of the second reader by 88%.3

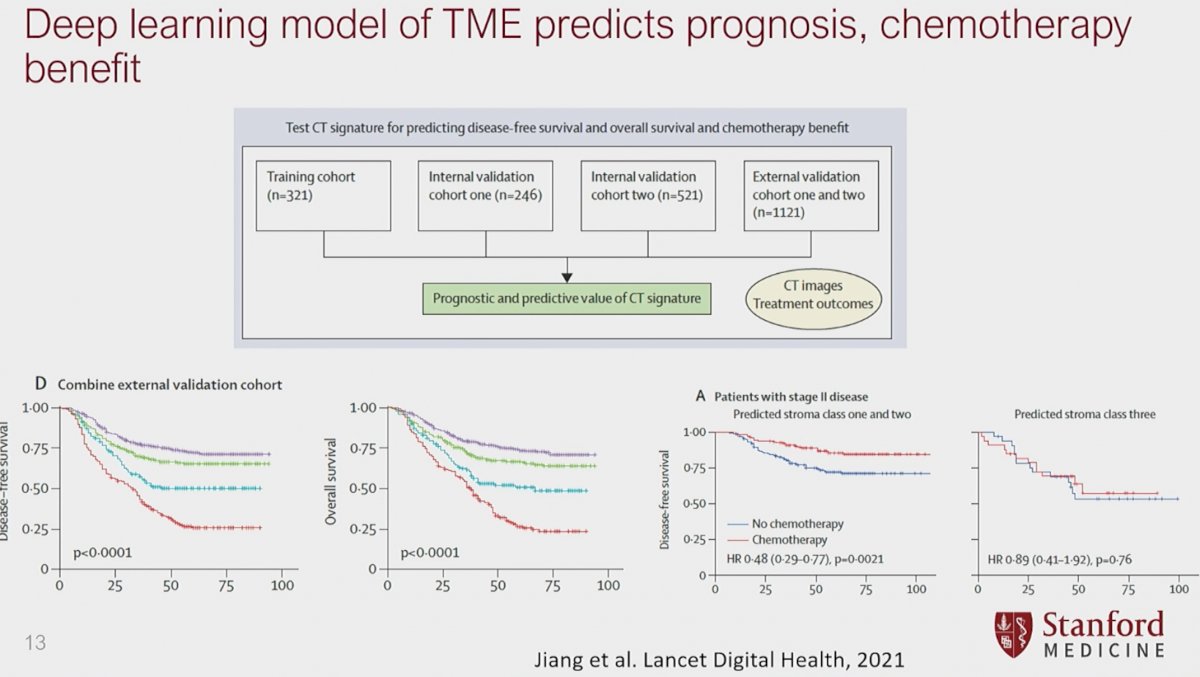
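The metrics quoted for the screening study (absolute reductions in false positives and false negatives, and AUC-ROC) can be made concrete with a small worked example. The sketch below uses invented toy labels and scores, not data from McKinney et al., and the function names are hypothetical; AUC is computed with the pairwise-ranking definition (the probability that a cancer case is scored higher than a non-cancer case).

```python
def roc_auc(labels, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly,
    counting ties as half a correct pair."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def error_rates(labels, predictions):
    """Return (false positive rate, false negative rate) for binary calls."""
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    return fp / labels.count(0), fn / labels.count(1)


# Toy data: 1 = cancer, 0 = no cancer (illustrative only).
labels = [1, 1, 0, 0]
model_scores = [0.9, 0.4, 0.6, 0.1]
reader_calls = [1, 0, 1, 0]
model_calls = [1, 1, 1, 0]

auc = roc_auc(labels, model_scores)
reader_fpr, reader_fnr = error_rates(labels, reader_calls)
model_fpr, model_fnr = error_rates(labels, model_calls)

# Absolute reductions are simple differences in rates (percentage points).
fp_reduction = (reader_fpr - model_fpr) * 100
fn_reduction = (reader_fnr - model_fnr) * 100
print(auc, fp_reduction, fn_reduction)
```

An absolute reduction is a difference between two rates, so a drop in the false negative rate from 50% to 0% in this toy example is a 50 percentage point reduction, regardless of the starting rate.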

While imaging-informed artificial intelligence appears to have clear promise in guiding treatment decisions and prognoses, can we combine both biology- and imaging-based artificial intelligence to enhance these models? Using CT images and outcomes data from 2,209 patients with resected gastric cancer and available histologic information, Jiang et al. developed a model that was able to predict histologically-based stroma classes using preoperative CT images. They trained a deep convolutional neural network model using a training cohort and subsequently validated it in internal and external cohorts. The deep-learning model achieved a high diagnostic accuracy for assessing tumour stroma in both internal validation cohort one (AUC 0·96–0·98) and the external validation cohorts (AUC 0·89–0·94). Notably, the stromal imaging signature was significantly associated with disease-free survival and overall survival in all cohorts (p<0·0001).

Importantly, this deep learning model of tumor microenvironment was able to predict prognosis and which patients would benefit from chemotherapy:

- In patients with stage II or III disease in predicted stroma classes one and two subgroups, patients who received adjuvant chemotherapy had improved survival compared with those who did not (in those with stage II disease hazard ratio [HR] 0·48 [95% CI 0·29–0·77], p=0·0021; and in those with stage III disease HR 0·70 [0·57–0·85], p=0·00042).

It is important to note that human input and artificial intelligence are not mutually exclusive. Human-AI collaborations for precision oncology have demonstrated a superior predictive ability for peritoneal recurrences in patients with advanced gastric cancer, compared to either modality alone.

Dr. Li concluded his presentation by emphasizing that future imaging-based AI models need prospective validation in clinical trials and future efforts need to combine multi-modal/omics data in the oncologic disease space.

Presented by: Ruijiang Li, PhD, Associate Professor (Research), Radiation Oncology - Radiation Physics, Stanford, Palo Alto, CA

Written by: Rashid Sayyid, MD, MSc – Society of Urologic Oncology (SUO) Clinical Fellow at The University of Toronto, @rksayyid on Twitter during the 108th Radiological Society of North America (RSNA) Scientific Assembly and Annual Meeting, Nov. 27 - Dec. 1, 2022, Chicago, IL

References:

- Hosny A, et al. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18(8):500-10.

- Wu J, et al. Integrating Tumor and Nodal Imaging Characteristics at Baseline and Mid-Treatment Computed Tomography Scans to Predict Distant Metastasis in Oropharyngeal Cancer Treated With Concurrent Chemoradiotherapy. Int J Radiat Oncol Biol Phys. 2019;104(4):942-52.

- McKinney SM, et al. International evaluation of an AI system for breast cancer screening. Nature. 2020;577(7788):89-94.

- Jiang Y, et al. Radiographical assessment of tumour stroma and treatment outcomes using deep learning: a retrospective, multicohort study. Lancet Digit Health. 2021;3(6):e371-82.