(UroToday.com) Eugene Shkolyar and his team presented their novel deep learning algorithm, CystoNet-T. Each year, nearly 2.2 million cystoscopies are performed in the United States and Europe in the effort against bladder cancer. To minimize tumor recurrence, adequate identification of a lesion is crucial, as the ability to identify the extent of a tumor helps with risk stratification, diagnosis, and selection of appropriate treatment. Despite how often the procedure is performed, standard cystoscopy misses nearly 15% of bladder tumors. Additionally, there are three unmet needs in this area: under-detection with white light cystoscopy, cost and availability in lower socioeconomic areas, and variability in provider experience. Given these sources of variability, Shkolyar suggests that AI could help “democratize” cystoscopy and improve the care that is provided.

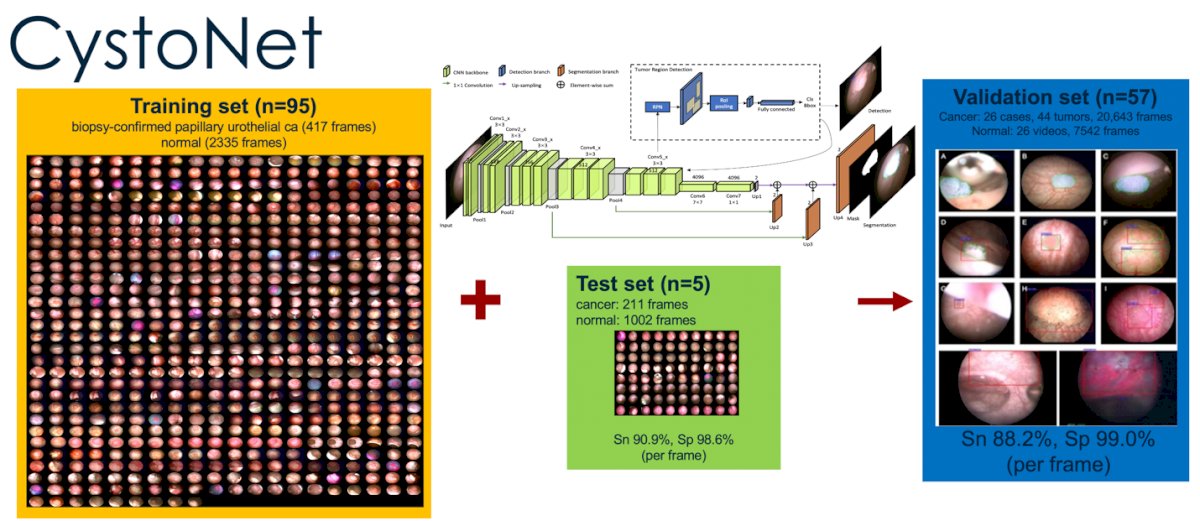

Since 2018, Shkolyar’s team has worked to integrate AI with cystoscopy, an effort that yielded a novel system named CystoNet. The program used image analysis technology from the YOLO platform to examine videos captured during cystoscopy, working in several steps to screen individual frames and outline suspicious growths/tumors (see image below).

The CystoNet trial and its validation set revealed that the program has sensitivities of 88% and 99%, respectively, for detecting bladder cancer based on individual frames. This, however, did not satisfy Shkolyar’s team, who described these results as “good but not perfect.”

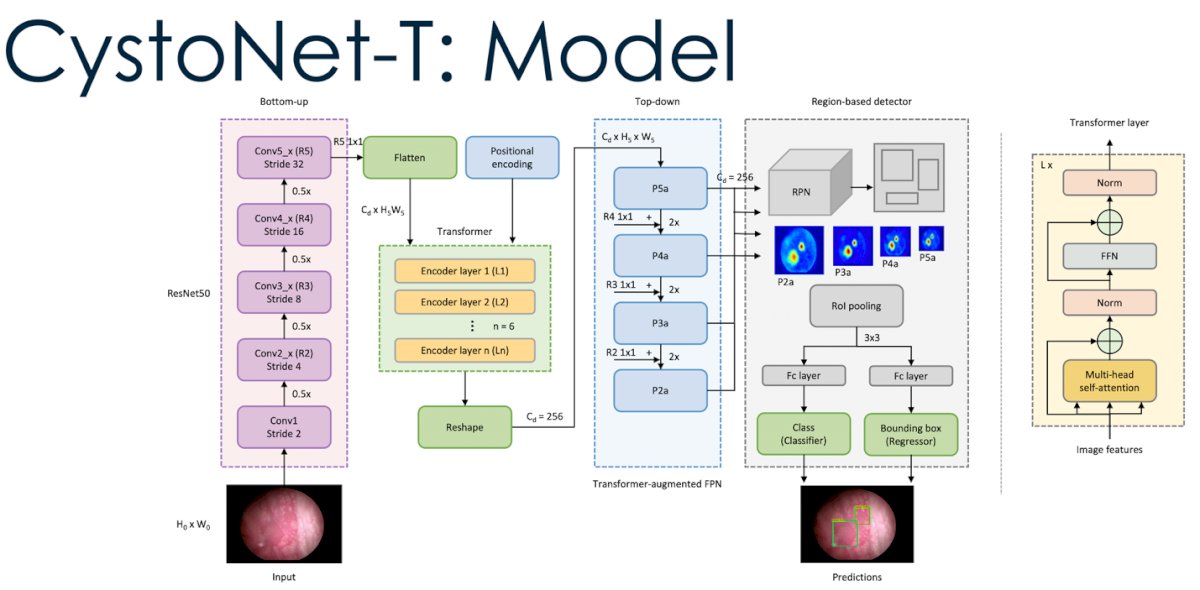

To improve their program, Shkolyar used a clever analogy to approach the challenge from another perspective. Just as words take on greater meaning within a larger context, so can the visuals derived from cystoscopy videos. Shkolyar emphasized that urologists do not base their judgements on a single frame but rather on the entire cystoscopy. In the AI world, the mechanism that can analyze information in context is known as a transformer. To interpret cystoscopy video in context rather than frame by frame, Shkolyar’s team developed CystoNet-T, which uses this transformer mechanism (see image below).

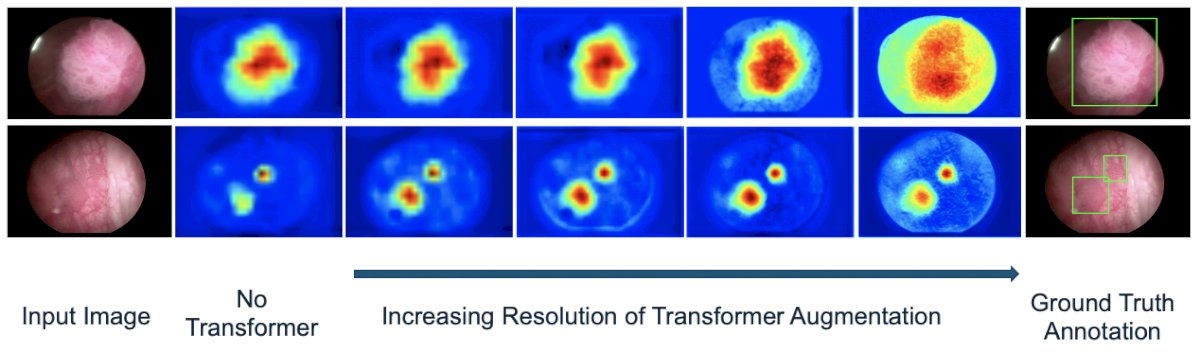
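The idea of reading each frame in the context of its neighbors can be illustrated with a minimal sketch of scaled dot-product self-attention, the core operation inside a transformer. This is not the CystoNet-T architecture, which was not detailed in the presentation: the function names and the toy `frame_features` values are hypothetical, and the learned query/key/value projections used by real transformers are omitted for brevity.

```python
import math


def softmax(scores):
    """Turn raw similarity scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(frames):
    """Scaled dot-product self-attention with identity projections.

    Each output vector is a weighted average of all frame vectors,
    weighted by how similar each frame is to the frame being updated.
    """
    dim = len(frames[0])
    scale = math.sqrt(dim)
    outputs = []
    for query in frames:
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale for key in frames
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * frame[i] for w, frame in zip(weights, frames))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs


# Hypothetical 2-D feature vectors for three consecutive video frames.
frame_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contextual = self_attention(frame_features)
```

Each entry of `contextual` blends information from every frame in the sequence, which is the sense in which a transformer evaluates a frame in context rather than in isolation.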

The image below shows CystoNet-T in action. Starting from the input image (left), the system applies the transformer mechanism to assess the area of the tumor. The second column from the left depicts what the AI detected without transformers, which differs markedly from the second column from the right, where the transformer mechanism was used. With transformers, the generated heat maps highlighted the tumor more strongly (almost too strongly, appearing slightly oversaturated).

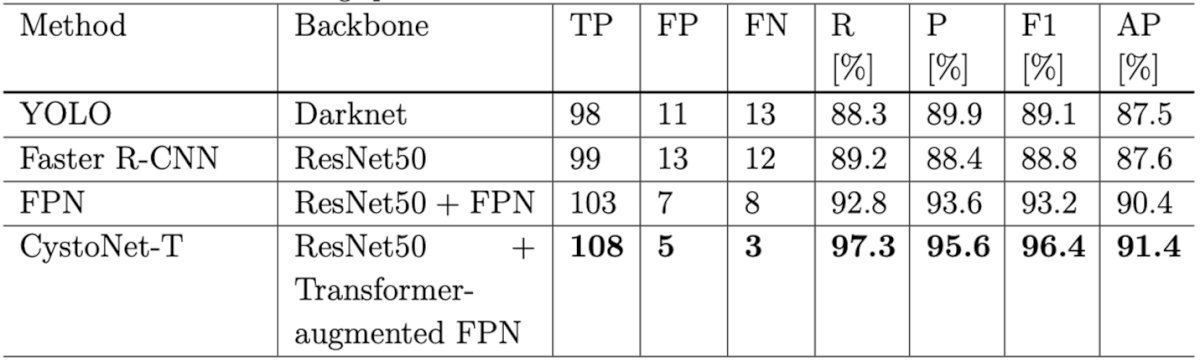

To test CystoNet-T, 54 patients were placed in the training set and 13 were used to validate the method. CystoNet-T achieved a recall of 97.3%, precision of 95.6%, F1 score of 96.4%, and AP of 91.4% (see table below).

Compared with the original CystoNet and the benchmark models Faster R-CNN and YOLO, CystoNet-T performed better by 7.3% in F1 score and 3.8 percentage points in AP.
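For readers less familiar with these metrics, the F1 score is the harmonic mean of precision and recall. The short sketch below checks that the reported precision and recall are consistent with the reported F1 score; the function name `f1_score` is illustrative and not taken from the presentation.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported for CystoNet-T.
f1 = f1_score(precision=0.956, recall=0.973)
print(f"F1 = {f1:.1%}")  # prints "F1 = 96.4%"
```

Because the harmonic mean is pulled toward the lower of the two values, a high F1 score requires both precision and recall to be high.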

Shkolyar did note that although CystoNet-T improved precision, recall, and AP, it is not yet clear how this would translate to a physician performing cystoscopy in real time. Additionally, the transformer mechanism requires a great deal of computational power (4 FPS on a single NVIDIA GeForce GTX Titan GPU), which means the analysis currently has to be done retrospectively.
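A rough back-of-the-envelope calculation shows why 4 FPS rules out real-time use. In the sketch below, only the 4 FPS throughput comes from the presentation; the 30 FPS capture rate and the 5-minute video length are assumptions chosen for illustration, and the function name is hypothetical.

```python
def processing_seconds(video_seconds, capture_fps, inference_fps):
    """Time needed to analyze every frame of a recorded video."""
    total_frames = video_seconds * capture_fps
    return total_frames / inference_fps


# 4 FPS is the reported throughput; 30 FPS and 300 s are assumed values.
needed = processing_seconds(video_seconds=300, capture_fps=30, inference_fps=4.0)
slowdown = needed / 300  # how many times slower than real time
```

Under these assumptions, a 5-minute recording would take 2250 seconds (37.5 minutes) to analyze, or 7.5 times the length of the procedure itself.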

To close, Shkolyar stated that the accuracy of bladder tumor detection and overall AI performance improved with the use of transformer mechanisms. Transformer-augmented AI platforms hold promise for supporting a physician’s decision making by enhancing the identification of target tumors and lesions. He also suggested that this approach could have other potential applications, specifically in segmentation.

After the presentation, Dr. Ghazi from the judging table and others in the audience raised the limitation of the proposed methodology and how difficult/inconvenient it would be if the system could not generate results prospectively. Shkolyar replied that although this is true, the technology is constantly improving and could soon reach the point where results are produced in a manner that is convenient and beneficial for the provider. A final question from the audience concerned how the data were gathered, to which Shkolyar remarked that it was due to a herculean effort on multiple fronts to bring this project to fruition.

Presented by: Eugene Shkolyar, MD, Fellow, Stanford University, Stanford, CA

Written by: Seyed Amiryaghoub M. Lavasani, B.A., University of California, Irvine, @amirlavasani_ on Twitter during the 2023 American Urological Association (AUA) Annual Meeting, Chicago, IL, April 27 – May 1, 2023